
We are hiring!

Research and Selected Publications

Recently, I have not been active in publicly released research. However, I remain deeply interested in deep learning, computer vision, multi-modal learning, and video understanding. I am passionate about building machine learning models that understand the physical world the way humans do.


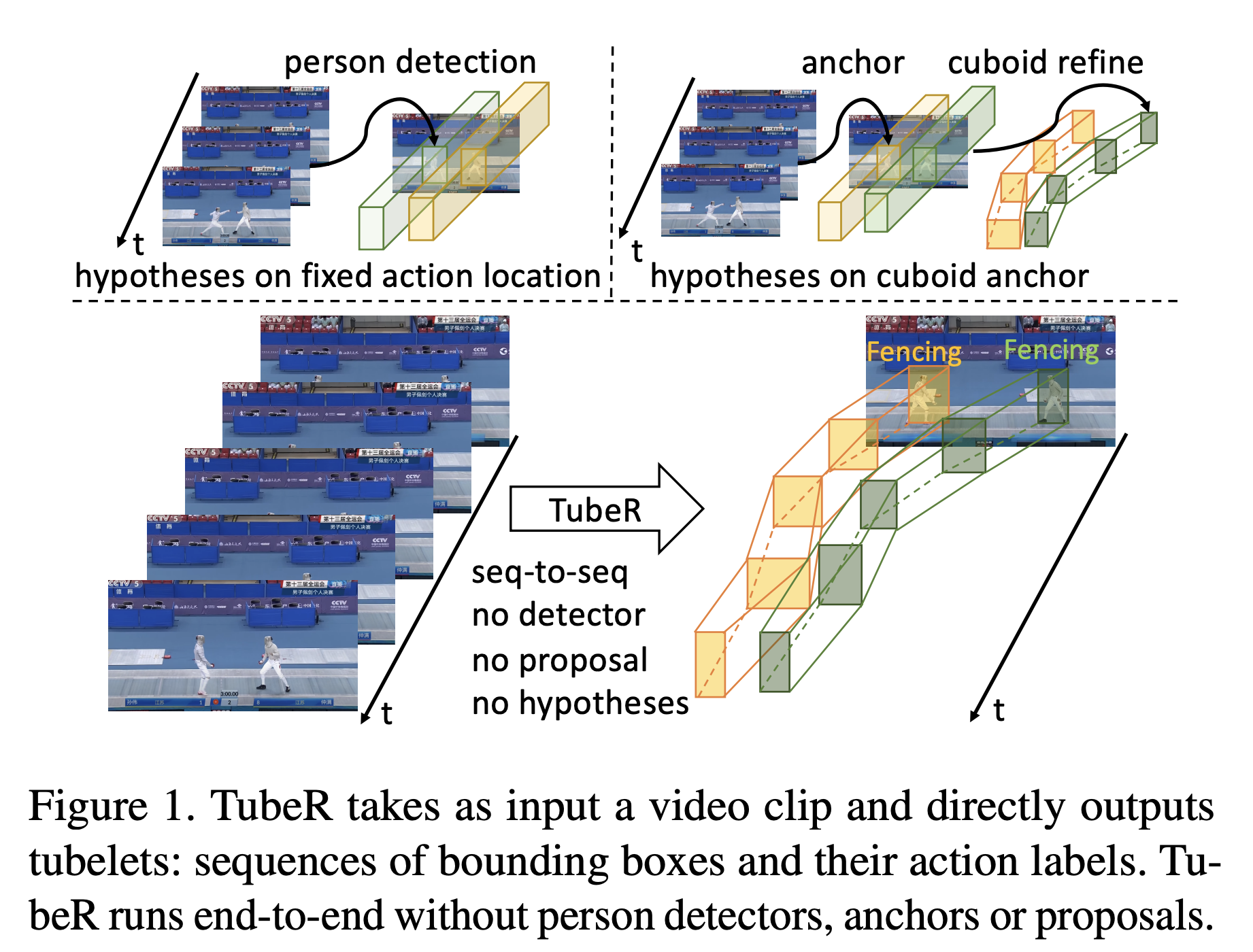

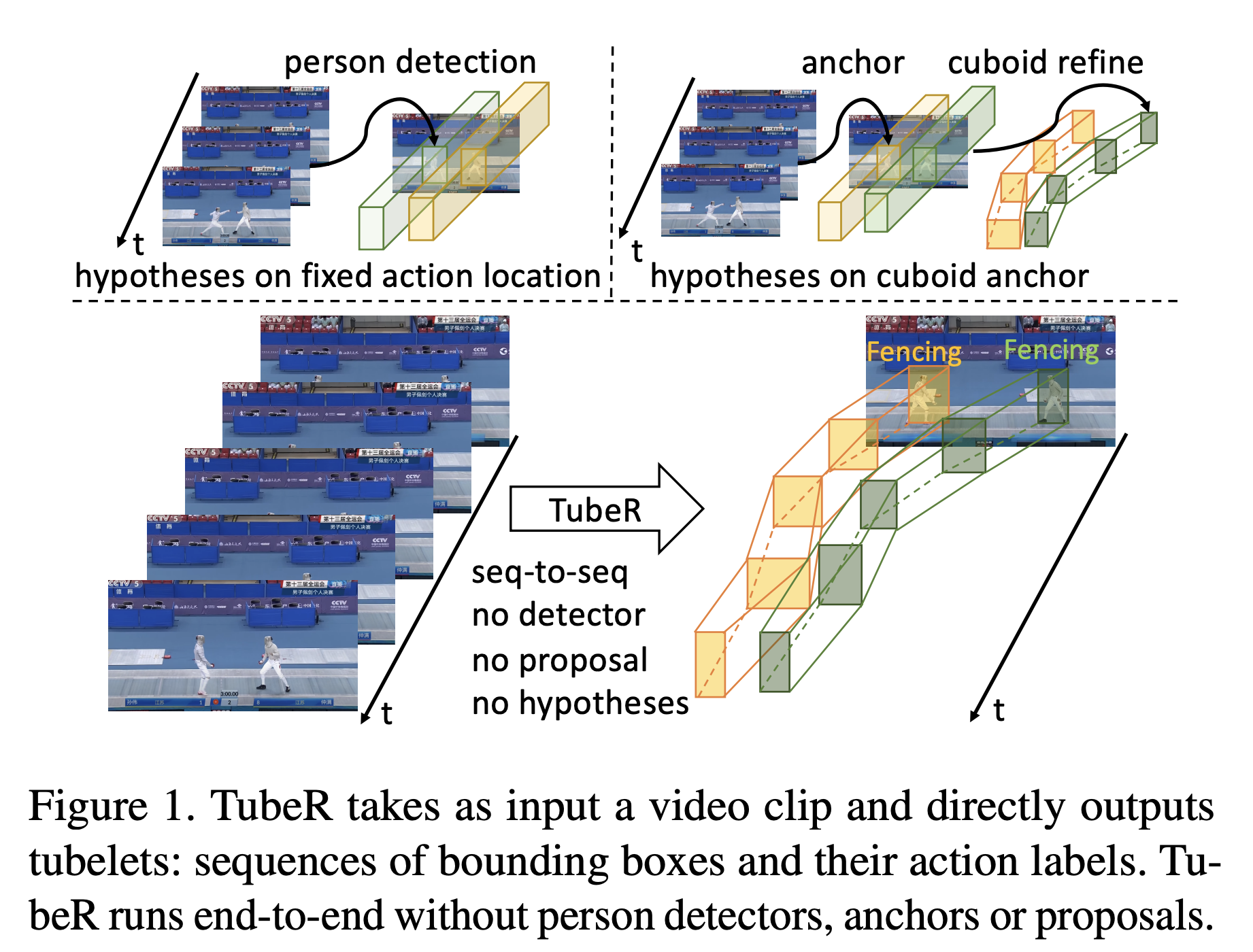

TubeR: Tubelet Transformer for Video Action Detection

Jiaojiao Zhao, Yanyi Zhang, Xinyu Li, Hao Chen, Bing Shuai, Mingze Xu, Chunhui Liu, Kaustav Kundu, Yuanjun Xiong, Davide Modolo, Ivan Marsic, Cees GM Snoek, Joseph Tighe

CVPR, 2022

Code / Paper

The first state-of-the-art transformer model for action detection, using learnable queries as tubelet proposals.


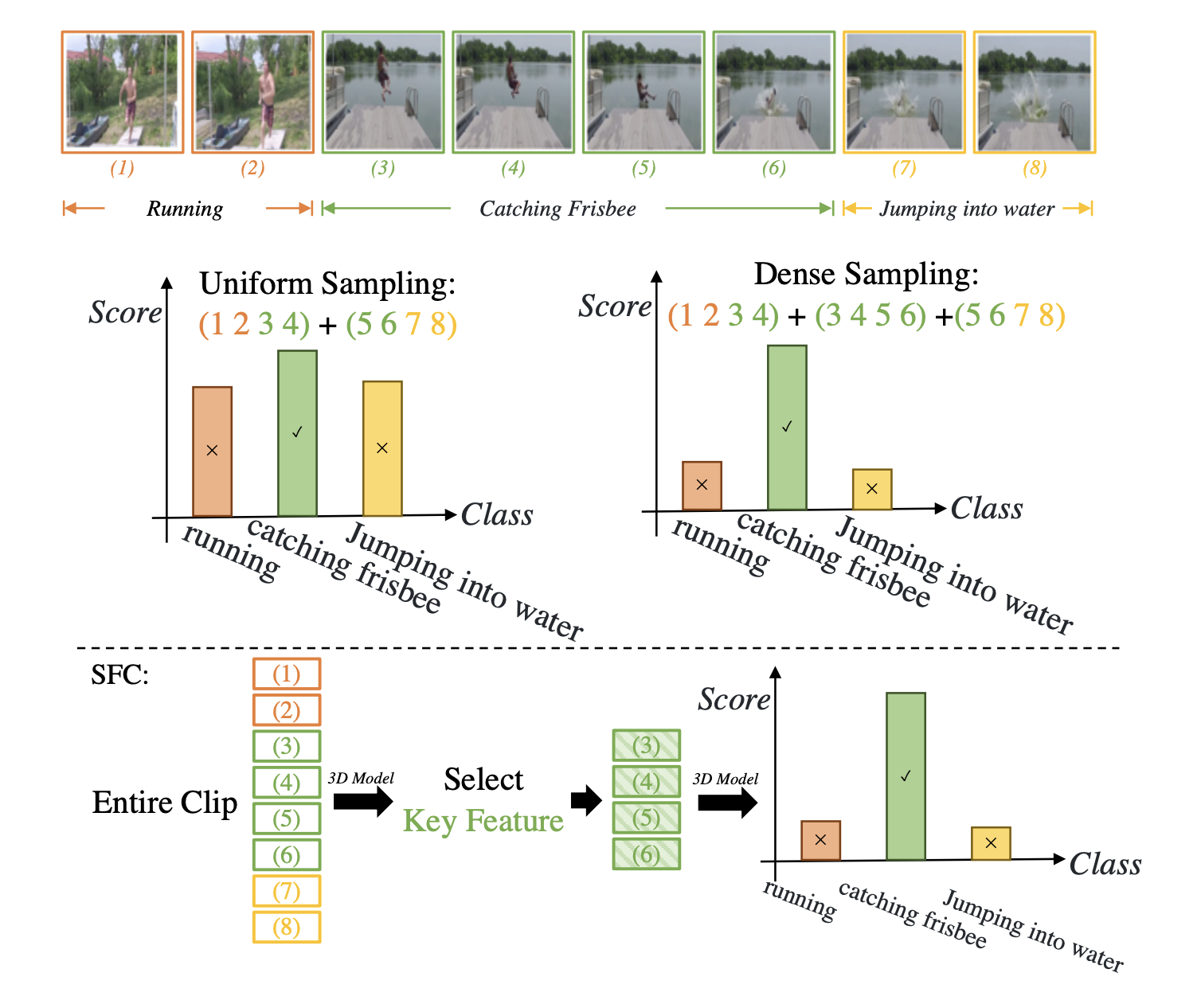

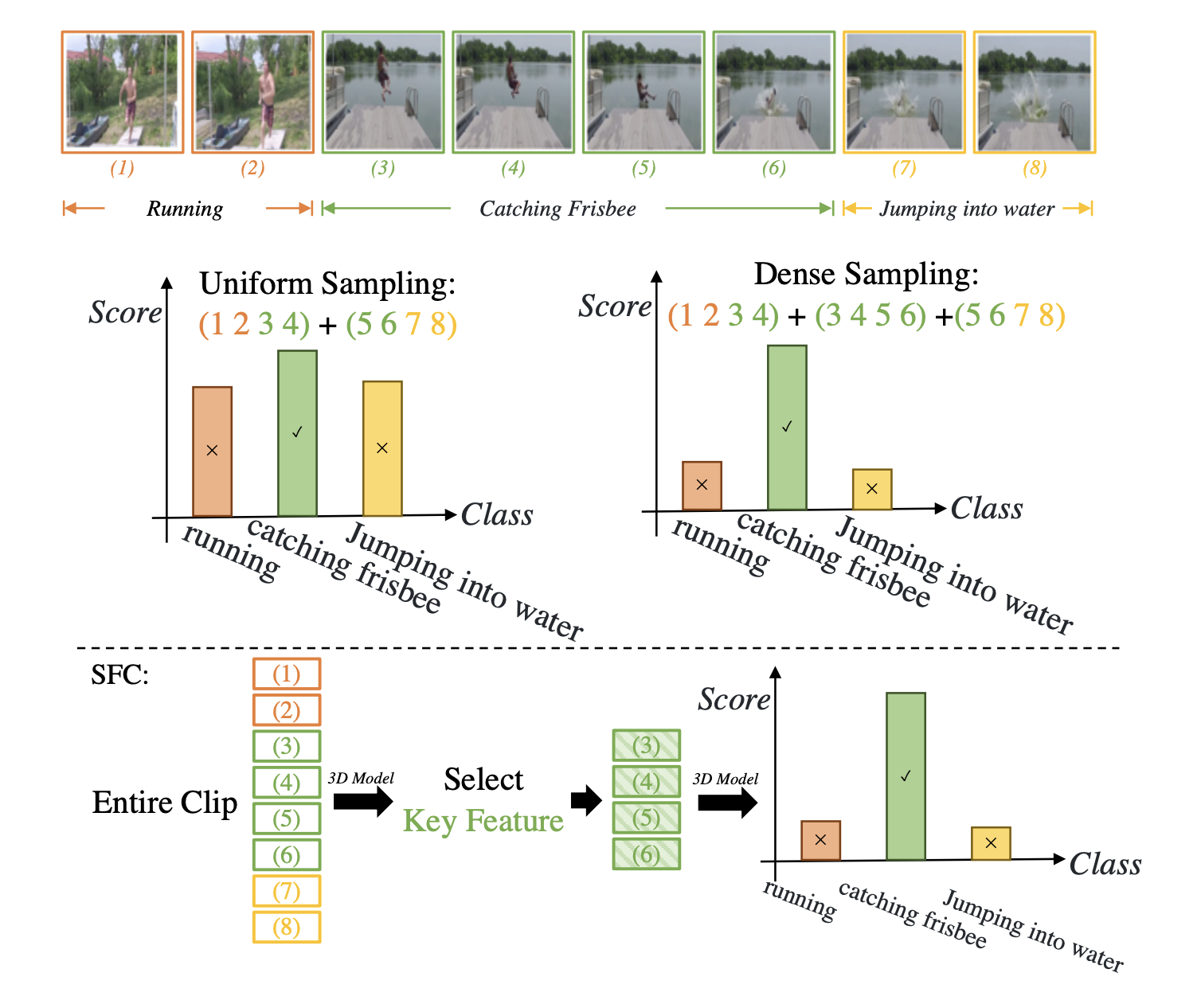

Selective Feature Compression for Efficient Activity Recognition Inference

Chunhui Liu, Xinyu Li, Hao Chen, Davide Modolo, Joseph Tighe

ICCV, 2021

Paper

Uses a transformer as a spatial feature sampler to achieve 6x faster inference without a drop in performance.


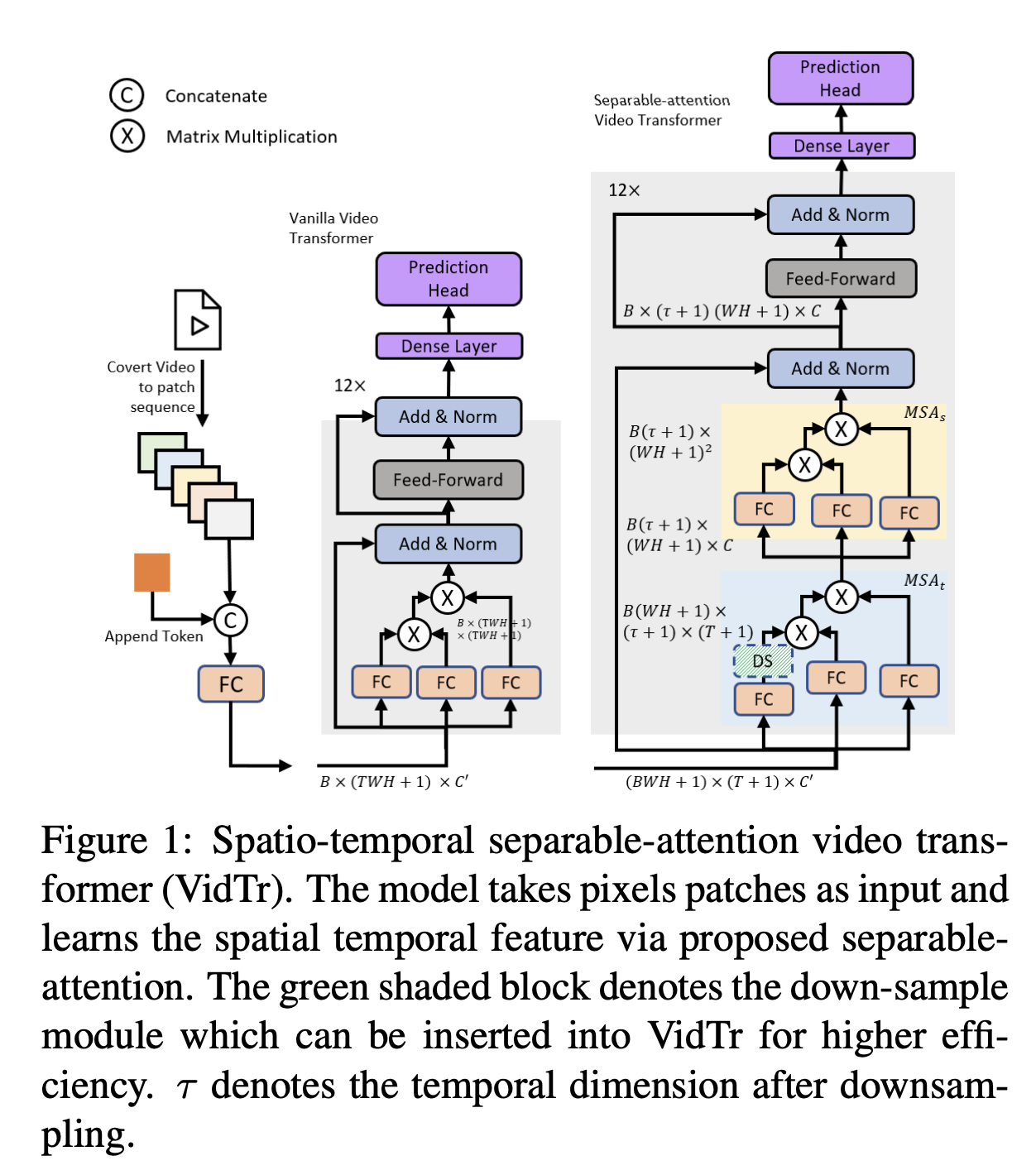

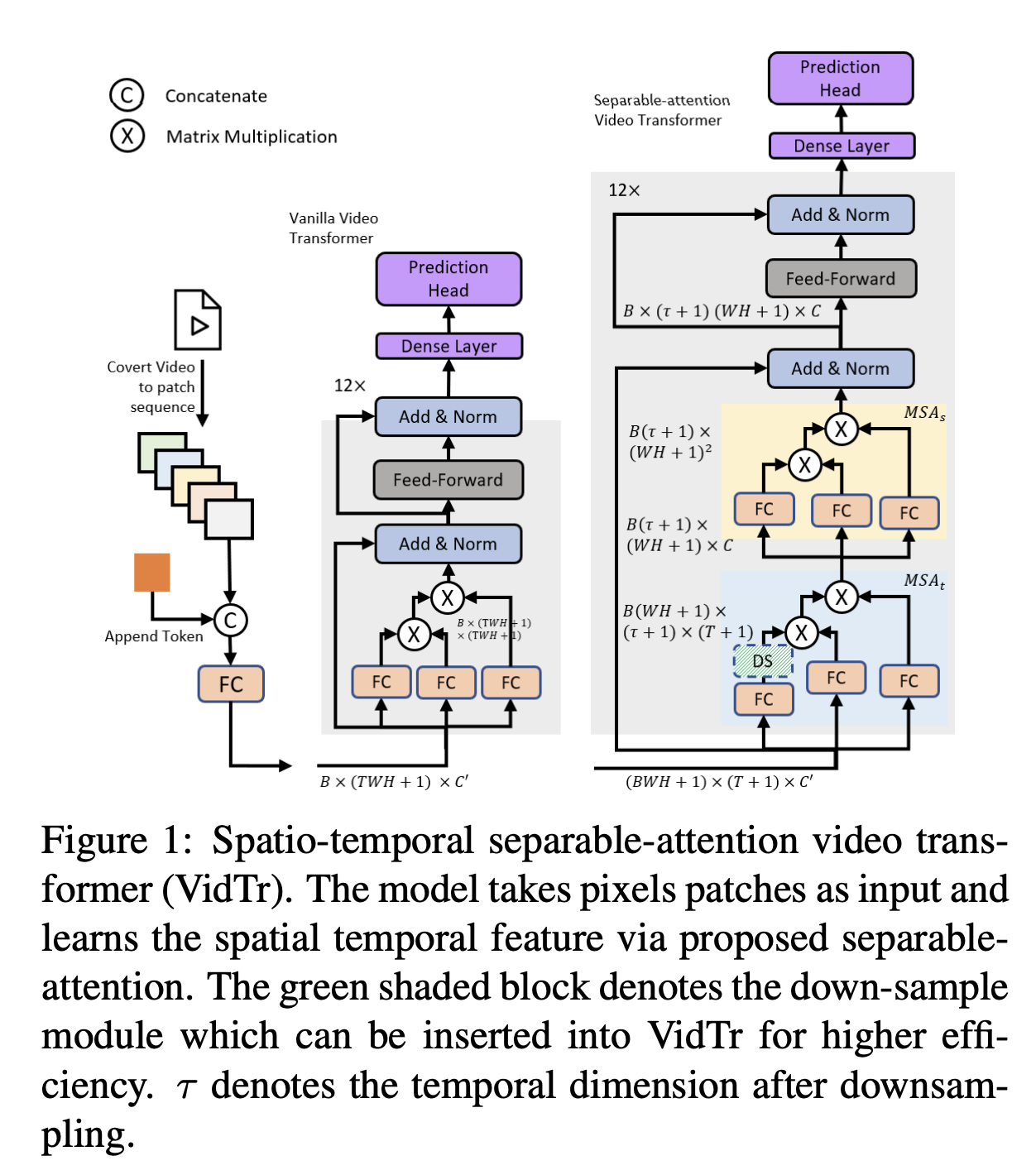

VidTr: Video Transformer Without Convolutions

Yanyi Zhang, Xinyu Li, Chunhui Liu, Bing Shuai, Yi Zhu, Biagio Brattoli, Hao Chen, Ivan Marsic, Joseph Tighe

ICCV, 2021

Code / Paper

One of the earliest works to use the transformer architecture for action recognition.


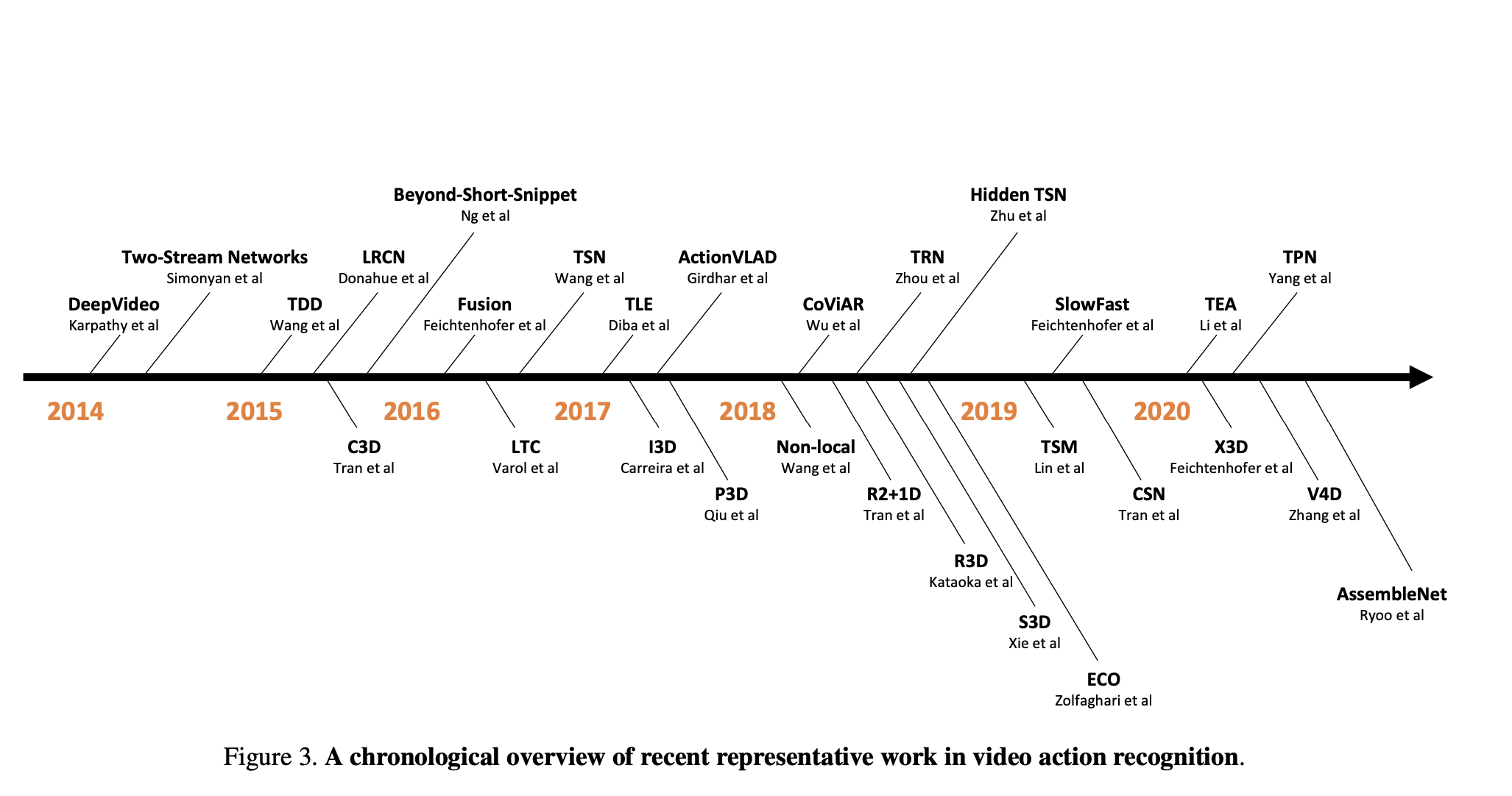

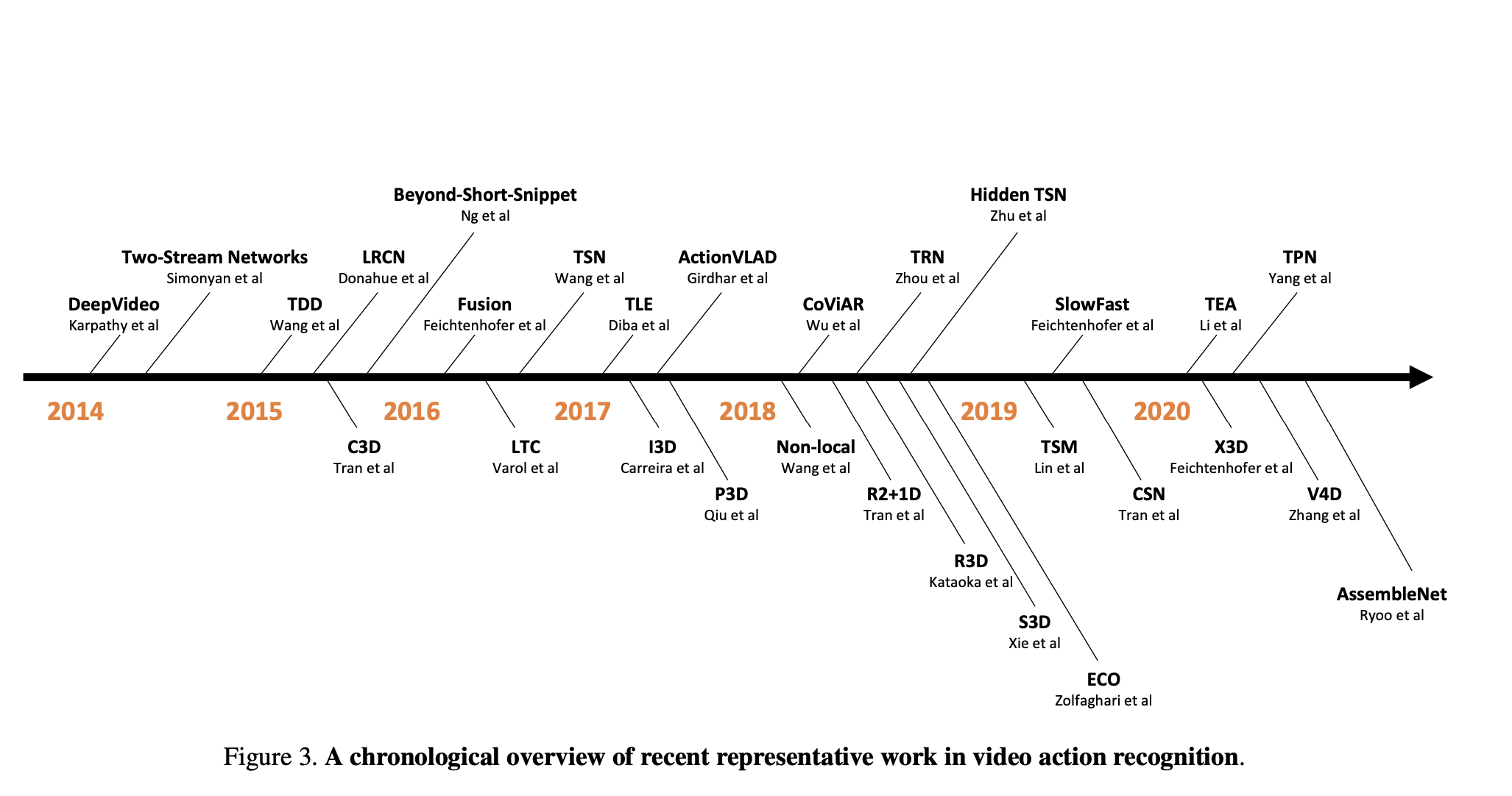

A Comprehensive Study of Deep Video Action Recognition

Yi Zhu, Xinyu Li, Chunhui Liu, Mohammadreza Zolfaghari, Yuanjun Xiong, Chongruo Wu, Zhi Zhang, Joseph Tighe, R Manmatha, Mu Li

arXiv, 2021

Code / Paper / Tutorial

A survey paper that summarizes 16 datasets and 200 existing papers on action understanding. We also provide tutorial workshops and a complete codebase to help newcomers enter the field.


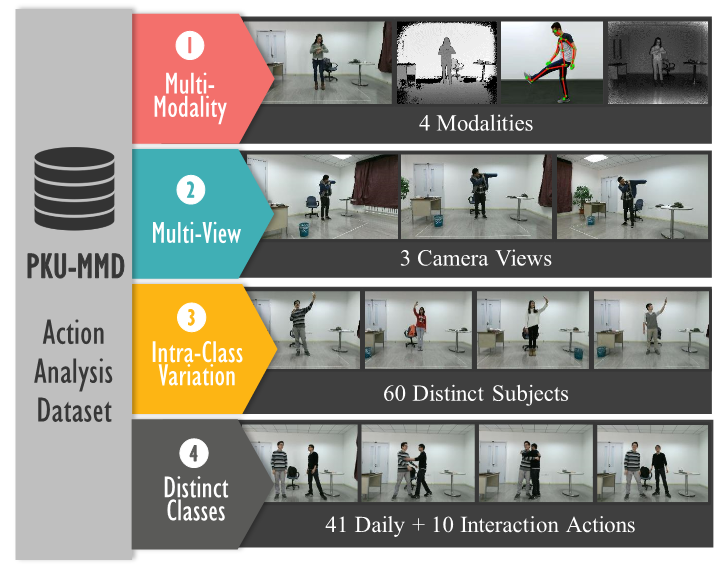

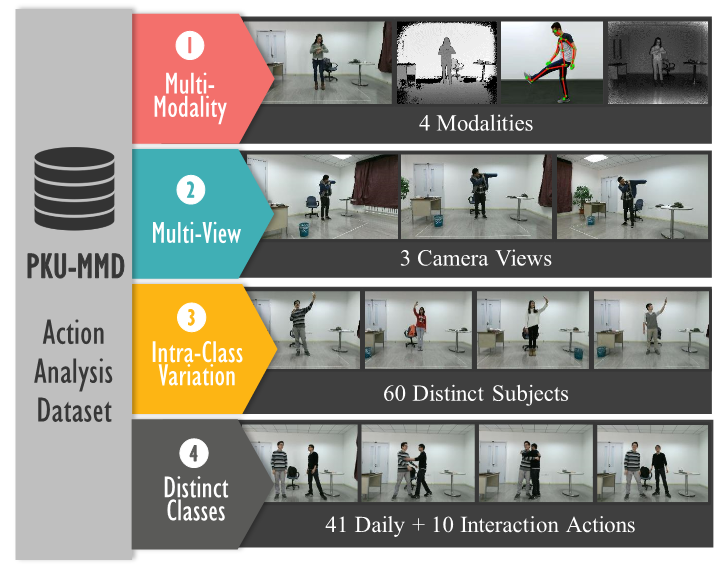

PKU-MMD: A Large Scale Benchmark for Continuous Multi-Modal Human Action Understanding

Chunhui Liu, Yueyu Hu, Yanghao Li, Sijie Song, Jiaying Liu

ACM MM Workshop, 2017

Code / Paper / Project Page / Dataset

A skeleton-based action detection dataset.


This template is forked from source code.